
What are Namespaces and Services in K8s?

In Kubernetes, Namespaces and Services are fundamental concepts used to organize and manage containerized applications and their networking within a Kubernetes cluster.

Let's explore each of these concepts:

Namespaces:

Namespaces provide a way to logically partition and isolate resources within a Kubernetes cluster.

They are like virtual clusters within a single physical cluster. Each namespace acts as a separate environment: resource names only need to be unique within a namespace, which avoids naming conflicts and makes resources easier to manage.

Some key points about namespaces:

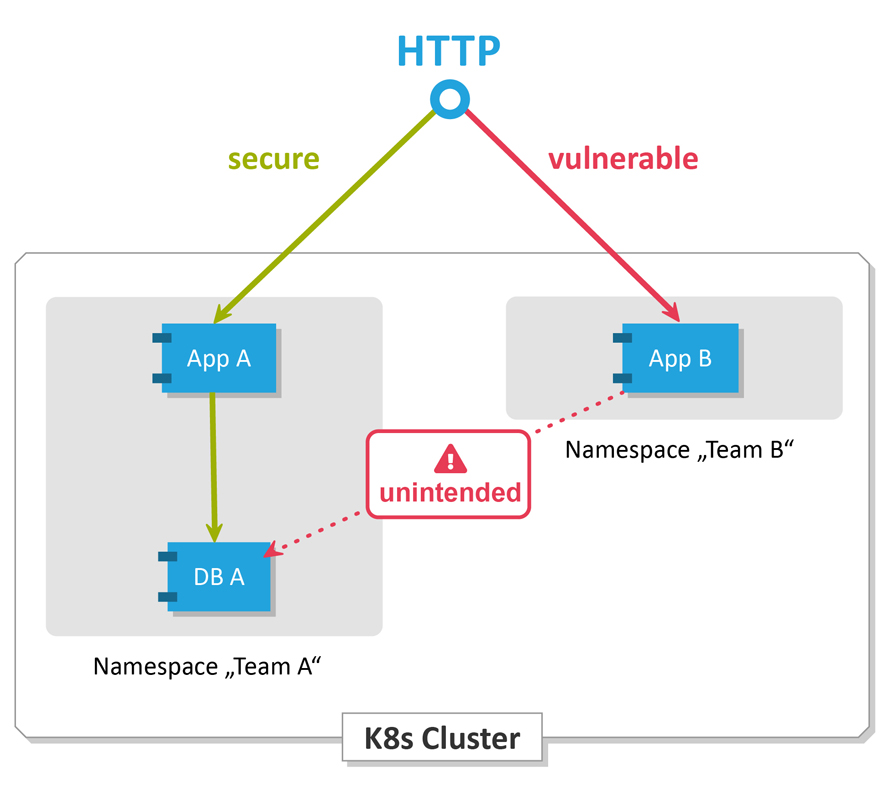

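Isolation: Namespaces provide a level of logical isolation, allowing you to run multiple applications or teams within the same cluster without their resources interfering with each other. On their own they do not block network traffic between namespaces; that requires NetworkPolicies.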

Resource Quotas: You can set resource quotas at the namespace level to control and limit the amount of CPU, memory, and other resources that applications within a namespace can consume.

Security Policies: Access control objects (RBAC Roles and RoleBindings) and NetworkPolicies are namespaced, so you can control who may access resources and how network traffic flows, one namespace at a time.

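Scoping: Many Kubernetes resources (e.g., Pods, Services, ConfigMaps, Secrets) are created within a specific namespace, and their names only have to be unique inside it. A Pod can use ConfigMaps and Secrets only from its own namespace, and a short Service name such as my-service resolves to the Service in the Pod's own namespace. A Service in another namespace is still reachable through its fully qualified DNS name, for example my-service.other-namespace.svc.cluster.local (assuming the default cluster.local domain). Some resources, such as Nodes and PersistentVolumes, are cluster-wide and do not belong to any namespace.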
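As a sketch of a namespace-level quota, the snippet below writes a ResourceQuota manifest for the zero-to-hero namespace used later in this post. The quota name compute-quota and all the CPU, memory, and Pod numbers are made-up example values, so adjust them for your cluster, and create the namespace before applying it. Keep in mind that once a quota covers cpu or memory, new Pods in that namespace must declare the corresponding requests and limits (or receive defaults from a LimitRange), otherwise they are rejected.

```shell
# Write a ResourceQuota manifest for the zero-to-hero namespace.
# The name "compute-quota" and all numbers below are example values (assumptions).
cat > resourcequota.yml <<'EOF'
apiVersion: v1
kind: ResourceQuota
metadata:
  name: compute-quota
  namespace: zero-to-hero
spec:
  hard:
    requests.cpu: "2"        # total CPU all Pods in the namespace may request
    requests.memory: 2Gi     # total memory all Pods in the namespace may request
    limits.cpu: "4"          # sum of CPU limits across all Pods
    limits.memory: 4Gi       # sum of memory limits across all Pods
    pods: "10"               # maximum number of Pods in the namespace
EOF

# Apply it (needs a running cluster):  kubectl apply -f resourcequota.yml
# Check usage against the quota:       kubectl describe resourcequota compute-quota -n zero-to-hero
```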

Common use cases for namespaces include separating development, testing, and production environments or isolating different teams or projects within a shared Kubernetes cluster.

Services:

In Kubernetes, Services are an abstraction that defines a logical set of Pods and a policy for accessing them.

They enable network connectivity and load balancing for Pods, allowing applications to communicate with each other or with external clients without needing to know the specific IP addresses or details of individual Pods.

There are several types of Services:

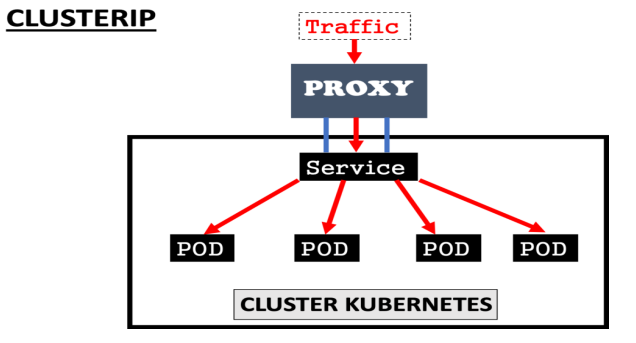

ClusterIP: This is the default type. It exposes the Service on an internal IP address within the cluster. It's useful for internal communication between Pods within the cluster.

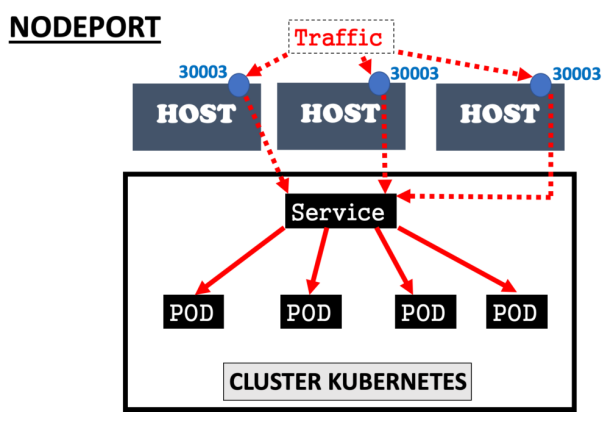

NodePort: This type exposes the Service on a static port on each Node's IP address. It's often used for external access to services running within the cluster.

LoadBalancer: This type provisions an external load balancer in cloud environments (e.g., AWS ELB, GCP Load Balancer) to distribute traffic to the Service. It's typically used for public-facing services.

ExternalName: This type maps a Service to an external DNS name by returning a DNS CNAME record; no traffic is proxied. It's used to give an in-cluster name to a service that lives outside the cluster.

Services play a crucial role in providing network connectivity and load balancing within a Kubernetes cluster, making it easier to build scalable and resilient applications.
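To make the default ClusterIP type concrete, here is a minimal sketch of a Service manifest. It assumes Pods labelled app: nginx that listen on port 80 in the zero-to-hero namespace; the Service name nginx-service and the label are hypothetical. Because ClusterIP is the default, the type line could also be left out.

```shell
# Write a ClusterIP Service manifest (name and label are hypothetical examples).
cat > service-clusterip.yml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
  namespace: zero-to-hero
spec:
  type: ClusterIP          # the default type; shown here for clarity
  selector:
    app: nginx             # traffic is sent to Pods carrying this label
  ports:
    - protocol: TCP
      port: 80             # port the Service listens on inside the cluster
      targetPort: 80       # containerPort on the selected Pods
EOF

# Apply it (needs a running cluster): kubectl apply -f service-clusterip.yml
```

Pods in the same namespace can then reach it simply as nginx-service, and Pods in other namespaces as nginx-service.zero-to-hero.svc.cluster.local, assuming the default cluster.local domain.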

Task 1:

Create a Namespace for your Deployment

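- Use the command `kubectl create namespace <namespace-name>` to create a Namespace.
- Update the deployment.yml file to include the Namespace (the metadata.namespace field).
- Apply the updated deployment using the command `kubectl apply -f deployment.yml -n <namespace-name>`. If deployment.yml already sets metadata.namespace, the -n value must match it, otherwise kubectl refuses to apply the file.
- Verify that the Namespace has been created by checking the status of the Namespaces in your cluster, for example with `kubectl get namespaces`.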
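If you prefer to keep everything in files, a Namespace can also be created declaratively instead of with kubectl create namespace. A minimal sketch, using the zero-to-hero name from the steps below:

```shell
# Write a Namespace manifest; "zero-to-hero" is the namespace used in this task.
cat > namespace.yml <<'EOF'
apiVersion: v1
kind: Namespace
metadata:
  name: zero-to-hero
EOF

# Equivalent to "kubectl create namespace zero-to-hero" (needs a running cluster):
#   kubectl apply -f namespace.yml
#   kubectl get namespaces
```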

step 1: Set up a k8s cluster, then check the built-in namespaces from the master (control-plane) node.

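```shell
# For convenience you can use an alias
alias k=kubectl

k get nodes               # check the nodes
k get ns                  # list the built-in namespaces (default, kube-system, kube-public, kube-node-lease)
k create ns zero-to-hero  # create the namespace
k get ns                  # confirm zero-to-hero is now listed
```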

step 2: Create deployment.yml specifying the namespace, container name, image, and container port.
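A minimal deployment.yml along those lines could look like the sketch below. The nginx:1.25 image, the names nginx-deployment and nginx, the app: nginx label, the replica count, and the resource values are all assumptions for illustration; the line that matters for this task is metadata.namespace. The resources block is optional unless the namespace has a ResourceQuota on CPU or memory.

```shell
# Write deployment.yml; image, names, label, replicas, and resources are example values.
cat > deployment.yml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  namespace: zero-to-hero      # places the Deployment (and its Pods) in this namespace
  labels:
    app: nginx
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx               # must match the Pod template labels below
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx          # container name
          image: nginx:1.25    # container image
          ports:
            - containerPort: 80
          resources:           # optional; required if a quota covers CPU/memory
            requests:
              cpu: 100m
              memory: 128Mi
            limits:
              cpu: 250m
              memory: 256Mi
EOF
```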

step 3: Create the deployment by applying the manifest: `k apply -f deployment.yml`.

step 4: If you now list the Pods and Deployments without specifying a namespace, kubectl reports "No resources found", because it looks in the current context's namespace (default, unless you changed it). Pass the namespace where the workloads were created with `-n <namespace_name>`:

```shell
k get pods -n zero-to-hero    # lists the Pods created in that namespace
k get deploy -n zero-to-hero  # lists the Deployments created in that namespace
```

Task 2:

- Read about Services, Load Balancing, and Networking in Kubernetes.

Services, Load Balancing, and Networking are essential components of Kubernetes that enable applications to run reliably and efficiently in a containerized environment. Let's explore each of these concepts in more detail:

Services:

In Kubernetes, a Service is an abstract way to expose an application running on a set of Pods as a network service.

It provides a stable endpoint (IP address and port) that other applications within or outside the cluster can use to communicate with the Pods.

There are several types of Services in Kubernetes:

ClusterIP:

This is the default type of Service. It exposes the Service only within the cluster. It's suitable for internal communication between Pods.

NodePort:

This type exposes the Service on a specific port on each Node in the cluster. It enables external access to the Service by connecting to any Node's IP address and the NodePort.

LoadBalancer: This type is used to provision an external load balancer (like AWS Elastic Load Balancer or GCP Load Balancer) that distributes traffic to the Service. It's mainly used for exposing services externally.

ExternalName: This type maps the Service to an external DNS name, rather than an IP address and port. It's typically used for integrating with external services.
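Here is a sketch of the two types that differ most from ClusterIP, written as one multi-document file. The names nginx-nodeport and external-db, the app: nginx selector, port 30080, and db.example.com are placeholders. A nodePort must fall inside the cluster's node port range (30000-32767 by default), and if you leave it out Kubernetes picks a free one. The ExternalName Service has no selector at all: DNS lookups of external-db.zero-to-hero.svc.cluster.local simply return a CNAME for db.example.com.

```shell
# Write a NodePort Service and an ExternalName Service into one file.
# All names, ports, and hostnames are placeholders (assumptions).
cat > services-external.yml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: nginx-nodeport
  namespace: zero-to-hero
spec:
  type: NodePort
  selector:
    app: nginx
  ports:
    - port: 80             # ClusterIP port inside the cluster
      targetPort: 80       # containerPort on the Pods
      nodePort: 30080      # reachable from outside as <any-node-ip>:30080
---
apiVersion: v1
kind: Service
metadata:
  name: external-db
  namespace: zero-to-hero
spec:
  type: ExternalName
  externalName: db.example.com   # cluster DNS answers with a CNAME to this host
EOF

# Apply both (needs a running cluster): kubectl apply -f services-external.yml
```

On a cloud provider that supports it, changing the first Service's type to LoadBalancer would additionally provision an external load balancer in front of it.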

Load Balancing:

![Kubernetes: each type of Service, by Thanwa Jindarattana (Medium)](https://miro.medium.com/v2/1*P-10bQg_1VheU9DRlvHBTQ.png)

Load balancing in Kubernetes spreads incoming traffic to a Service across the multiple Pods that back it.

This improves the availability and scalability of applications.

Kubernetes provides two primary methods for load balancing:

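Service Load Balancing: Kubernetes Services distribute traffic among the Pods backing the Service. This is handled by kube-proxy, which runs on each Node and programs packet-forwarding rules on that Node (for example with iptables or IPVS) so that connections to the Service's IP are sent to one of its Pods. In iptables mode a backend Pod is picked at random; IPVS mode uses round-robin by default.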

Ingress:

Ingress is a Kubernetes resource that acts as an entry point for HTTP and HTTPS traffic into the cluster. It provides more advanced routing and load balancing capabilities than basic Service load balancing. An Ingress resource has no effect on its own: an Ingress controller (e.g., NGINX Ingress Controller, Traefik) must be running in the cluster to implement the Ingress rules and route traffic to different Services based on hostnames and URL paths.
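A minimal Ingress sketch that routes HTTP requests for one hostname to a Service. It assumes an Ingress controller is installed with an IngressClass named nginx, and a ClusterIP Service called nginx-service on port 80 in the zero-to-hero namespace; app.example.com and all names are placeholders.

```shell
# Write an Ingress manifest (hostname, class, and Service name are placeholders).
cat > ingress.yml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-ingress
  namespace: zero-to-hero
spec:
  ingressClassName: nginx            # which installed Ingress controller handles this
  rules:
    - host: app.example.com          # match requests by their Host header
      http:
        paths:
          - path: /                  # match every path under /
            pathType: Prefix
            backend:
              service:
                name: nginx-service  # must be in the same namespace as the Ingress
                port:
                  number: 80
EOF

# Apply it (needs a running cluster and an Ingress controller): kubectl apply -f ingress.yml
```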

Networking:

Networking in Kubernetes is a complex topic due to its container-centric nature. Kubernetes offers several networking components and concepts:

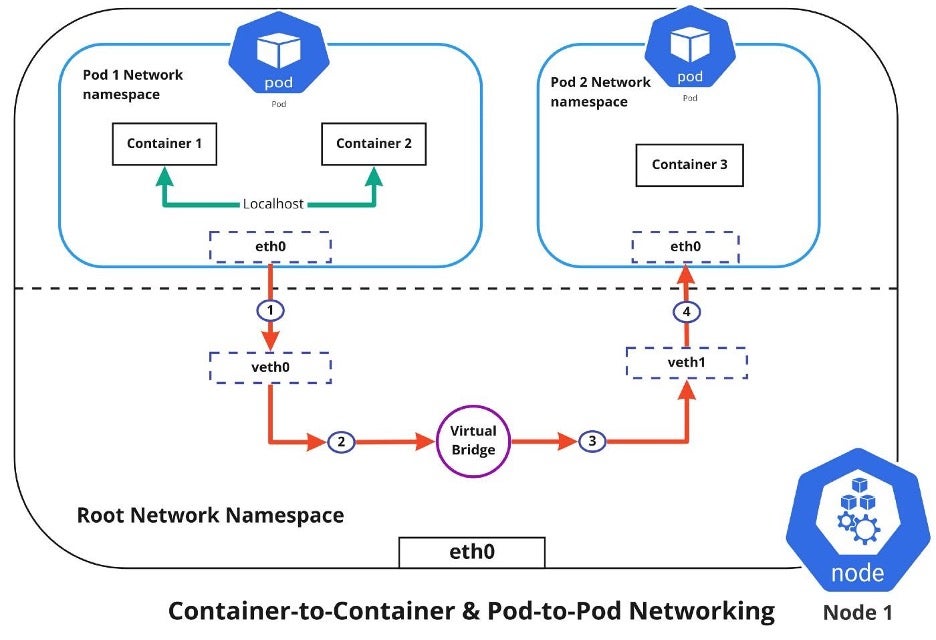

Pod Networking:

Each Pod in Kubernetes gets its own unique IP address. Containers within the same Pod share that address and communicate using localhost, while Pods communicate with each other directly by IP, even across Nodes and without NAT; the CNI plugin is what implements this routing.

Service Networking:

As mentioned earlier, Services provide a stable network endpoint for Pods. Kubernetes ensures that the traffic to a Service is properly routed to the correct Pods, even if they move or scale.

Network Policies:

Network Policies allow you to define rules that control the traffic flow between Pods. They can be used to enforce security policies and segmentation within the cluster.
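As an example of segmentation, the sketch below only admits incoming traffic to Pods in zero-to-hero when it comes from other Pods in the same namespace. The policy name is hypothetical. Policies are only enforced if the cluster's CNI plugin supports them: Calico does, while Flannel on its own does not.

```shell
# Write a NetworkPolicy manifest (the policy name is a hypothetical example).
cat > networkpolicy.yml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-same-namespace
  namespace: zero-to-hero
spec:
  podSelector: {}          # applies to every Pod in zero-to-hero
  policyTypes:
    - Ingress              # only incoming traffic is restricted
  ingress:
    - from:
        - podSelector: {}  # allow traffic from any Pod in this same namespace
EOF

# Apply it (needs a running cluster): kubectl apply -f networkpolicy.yml
```

Once applied, connections from Pods in other namespaces to these Pods are dropped, while outgoing traffic is not restricted because only Ingress is listed in policyTypes.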

CNI (Container Network Interface): Kubernetes relies on CNI plugins to manage networking between Pods. Popular CNI plugins include Calico, Flannel, and Weave.

Services, Load Balancing, and Networking are crucial aspects of Kubernetes that enable the deployment and management of containerized applications while ensuring they are accessible, reliable, and secure.

Understanding how these components work together is essential for effectively deploying and scaling applications in a Kubernetes cluster.

I hope you find the blog post helpful! If you do, don't forget to like 👍, comment 💬, and share 📢 it with your friends and colleagues.